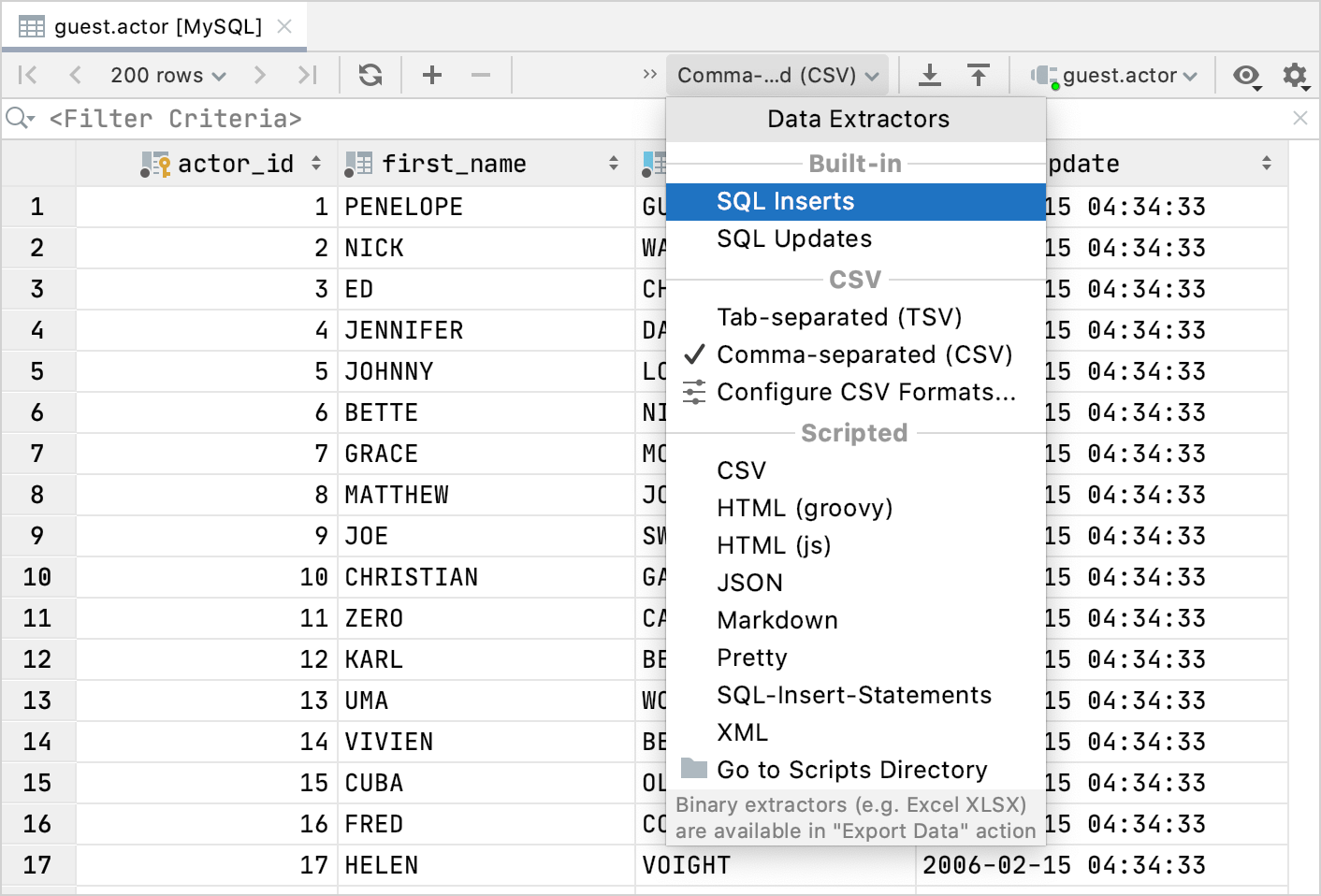

You can also customize the output using the configuration vars at the top of the file, but the defaults should work well for most people. That's it! You now have access to exporting rows as batches. Create a new file called "SQL Batch Multi-Line " (The double extension is important for syntax highlighting).Additionally, in most cases, the difference in performance is very minor between batched inserts and 1 large insert. Allowing normal queries to run at the same time as the set of batched queries. Inserting in batches gives you a significant performance boost, while still preventing tables or rows from getting locked for too long. This is particularly harmful in production environments, especially when you need to comply with an SLA. Table & row locks block all other queries that modify data (causing a desync) and even some queries that read data (causing request lag). One large insert is technically faster than batched inserts however, this often comes at the cost of locking tables/rows for extended periods of time. This makes the individual inserts very slow, while batches avoid almost all of this overhead. In SQL, each query is parsed and executed separately which causes significant overhead between each query. In short, better performance and limited table/row locks when inserting thousands, millions, or even billions of rows.īatches perform orders of magnitude faster than individual inserts. I will also take a moment to say this is my first post and would love any feedback on improving it.

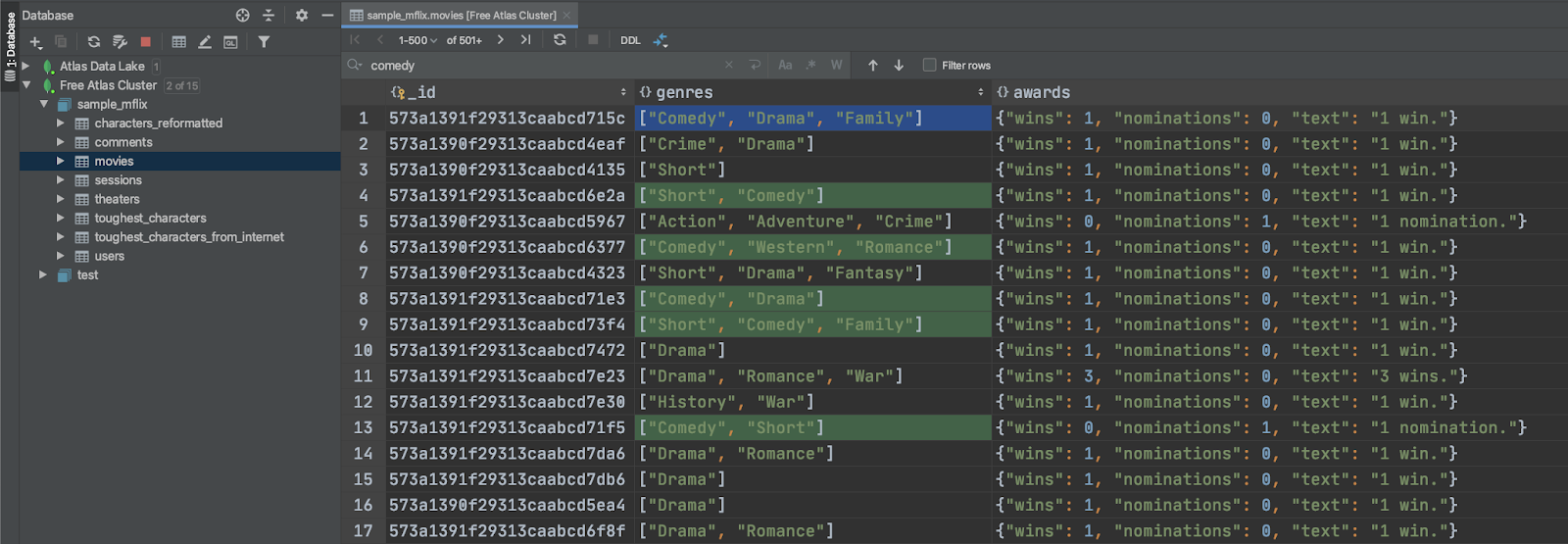

I copy the data into a spreadsheet which already have the column names and I find it really annoying when I copy the data, and the column names tag along as well. One of the things I'd like is to copy the data from the resultset without copying the column names. While I could go on with my love/hate relationship with DG's features, (it's mostly love) it is fortunately easy enough to manually add some functionality. I'm using DataGrip, having switched from DBeaver a few days ago. So, in order to conserve machine resources and memory, we just run the query again and write the data directly to the destination and bypass the data grid.This isn't so much a "great new feature" as it is a missing feature from DataGrip. Some query result sets are larger than others, and not all of us have 64 bit monsters to run our tools on. If you do force all of the rows back into the grid, you are going to be consuming a decent amount of memory. When you work with databases, you’re exploring the data contained in them. It supports PostgreSQL, MySQL, SQL Server, Oracle, MongoDB, and many other databases. This will force the rest of the records back into the grid.Īnd now you will see something like this Fetch time can often exceed execution time! Now with all records returned, Export will use the data in the grid instead of re-querying the database.Ĭlear as mud? But WHY does SQL Developer force the 2nd query execution? JetBrains DataGrip is a universal tool for dealing with databases. So how do you get the rest of the records? The first fetch is often sufficient to answer most questions, but. What’s really most important to understand here is that that many of your queries return MORE data than what is shown on the initial display of the results grid. We consider this a low-level preference that shouldn’t be tweaked unless explicitly advised to do so by Oracle Support. You can read more of the JDBC nitty-gritty here if you’re morbidly curious. This means that SQL Developer will by default return the first 100 rows of your query. The default is ‘100.’ Fetch! SQL Developer ALWAYS comes back with the stick 🙂 The size of the batch is dependent on what you have ‘Sql Array Fetch Size’ set to. What good is running a query without seeing the results? However this last phase is what is known as a ‘fetch.’ Records are retrieved in batches. You asking for the data is usually an assumed part of running the actual query. The database tells the requestor that their query has executed. You don’t really care about how it gets your data, but it’s there to see in the plan. We provide a beautiful, modern SQL editor for data focused teams looking to. It may spawn 1 or more processes to get the data, do all the sorts, merges, etc. PopSQL is the evolution of legacy SQL editors like DataGrip, DBeaver, Postico. Hopefully this shouldn’t take but a millisecond or two. If it doesn’t already have one cached, it will build a new one. The database engine parses the query and identifies an execution plan. default folder in /Users/USERNAME/Library/Preferences/DataGrip2018.1/projects contains database connection properties. Running a query in Oracle has several distinct steps. What the heck is he talking about, fetched? The solution is to ensure all of the data has been fetched into the grid before you ask for the Export. You ask SQL Developer to ‘Export.’ SQL Developer seems to start over by running the query again. You wait patiently for your query and results to return.

0 kommentar(er)

0 kommentar(er)